How to Bypass AI Detection: Reddit’s Top Tips for 2025

Imagine this: you’ve spent hours using AI to draft a brilliant article, only to have it flagged by GPTZero or Originality.ai. If you’ve ever searched Reddit for how to bypass AI detection, you’re not alone. Creators everywhere are trying to sound more human without losing their voice.

For content creators, marketers, and students, this scenario is a growing source of anxiety. A flag from one of these detectors, or from Turnitin, can mean anything from a tanking SEO ranking to a failing grade. But what if the goal wasn’t just to “trick” the software, but to create content so good, so human, that it becomes genuinely undetectable? This guide distills the best community-vetted strategies from Reddit to help you do exactly that.

How AI Detection Works, and How to Bypass It

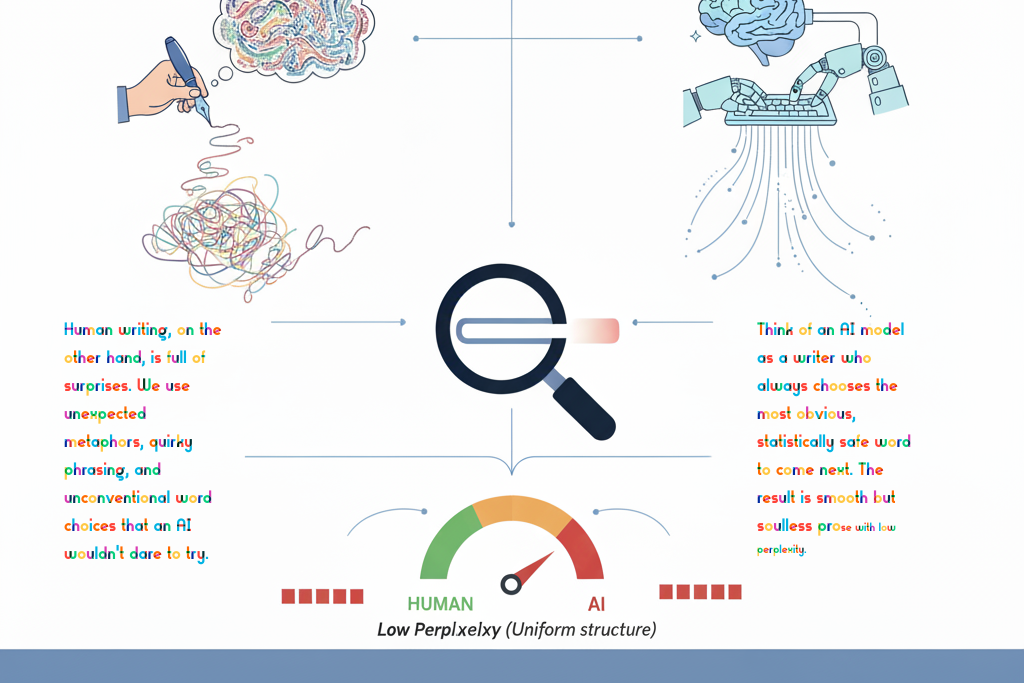

To outsmart the machine, you first need to understand how it thinks. AI detectors aren’t casting spells; they’re sophisticated pattern-recognition systems looking for the tell-tale fingerprints of machine-generated text. They primarily focus on two key metrics: perplexity and burstiness.

Perplexity is a fancy term for unpredictability: the more predictable a text is to a language model, the lower its perplexity. Think of an AI model as a writer who tends to choose the most obvious, statistically safe word to come next. The result is smooth but soulless prose with low perplexity. Human writing, on the other hand, is full of surprises. We use unexpected metaphors, quirky phrasing, and unconventional word choices that an AI rarely reaches for. This creative chaos results in higher perplexity.
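To make the idea concrete, here is a minimal sketch of the standard perplexity formula: the exponential of the average negative log-probability per token. The probabilities below are made up for illustration. A real detector would get them from its own language model, and nothing here reflects how any specific product scores text.

```python
import math


def perplexity(token_probs):
    """Perplexity given the probability a model assigned to each token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Average negative log-probability per token (cross-entropy in nats).
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


# Hypothetical per-token probabilities, invented for this example.
predictable = [0.9, 0.8, 0.85, 0.9]  # the model saw each word coming
surprising = [0.2, 0.05, 0.3, 0.1]   # the model was repeatedly surprised

print(round(perplexity(predictable), 2))
print(round(perplexity(surprising), 2))
```

The predictable sequence scores close to 1, while the surprising one scores several times higher. That gap is the signal the paragraph above describes.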

Burstiness is all about rhythm. Listen to how a person speaks. They mix long, flowing sentences with short, punchy ones. This variation in sentence length and structure creates a natural cadence. AI-generated content often lacks this dynamic, producing sentences of uniform length that feel monotonous, like a metronome ticking away without any soul.
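One rough way to put a number on this rhythm is the coefficient of variation of sentence length: the standard deviation divided by the mean. The sketch below is an assumption about how burstiness might be approximated, not the formula any particular detector uses, and its sentence splitter is deliberately naive.

```python
import re
import statistics


def sentence_lengths(text):
    """Word count of each sentence, splitting naively on ., ! and ?"""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (std dev / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # a single sentence has no variation to measure
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = ("Stop. The cat, bored of waiting by the window all afternoon, "
          "finally sat down. Why not?")

print(sentence_lengths(uniform), burstiness(uniform))
print(sentence_lengths(varied), burstiness(varied))
```

The uniform sample scores zero because every sentence has the same word count, while the varied sample scores well above it. Abbreviations such as “e.g.” will confuse the splitter, so treat the result as a quick self-check and not a verdict.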

These detectors use machine learning models trained on large collections of human and AI text. But here’s the critical flaw: many forms of legitimate human writing, such as technical manuals, legal documents, or academic papers by non-native English speakers, naturally have low perplexity and burstiness. As a result, detectors tend to produce false positives on these styles. Humanizing your content isn’t just about evasion; it’s a necessary defense against imperfect technology.

Why Bypassing AI Detection Helps Your SEO and E-E-A-T

The quest to “humanize” AI-generated text is much more than a cat-and-mouse game with detectors. It’s one of the most powerful SEO strategies you can adopt today. The very qualities that make your content feel human are precisely what search engines like Google are desperate to find.

This directly aligns with E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness), the framework in Google’s guidelines for judging content quality. Google’s algorithms are designed to reward helpful content that feels real. When you add personal anecdotes, real-world examples, and a distinct authorial voice, you’re not just bypassing a detector; you’re giving readers and search engines the signals of value they look for. Unedited, generic AI slop is the digital equivalent of junk food: it tends to get ignored and struggles to rank.

More importantly, authentic content forges a real connection with your audience. Robotic text kills engagement, leading to high bounce rates because it fails to build trust. A conversational, empathetic tone makes your readers feel seen and understood, fostering the kind of loyalty that turns visitors into a community. Ultimately, humanizing is how you define and protect your unique brand voice—something no generic AI can ever truly replicate.

Method 1: The Artisan’s Approach—Manually Rewriting AI Text

For truly exceptional content that is both high-quality and undetectable, nothing beats the human touch. Think of yourself as a master chef transforming a store-bought sauce into a gourmet creation. This process is about fundamentally reshaping the AI’s draft into something with personality and flair. Here’s how to do it:

Become a Sentence Architect: AI often churns out long, uniform paragraphs. Your first job is demolition and reconstruction. Vary your sentence lengths. Mix a long, descriptive sentence with a short, punchy one. Start your sentences in different ways to break the monotonous subject-verb-object pattern.

Inject Your Unique Experience: This is your secret weapon. Weave in a short personal story, a relevant case study, or even a clever analogy. This doesn’t just make the content more engaging; it’s a direct injection of the “Experience” that Google’s E-E-A-T guidelines reward.

Write Like You Talk: Ditch the formality. Use contractions (like “it’s” and “you’re”), ask rhetorical questions, and use common idioms. Reading your draft as if you were explaining the concept to a friend is a great way to make the tone more personal and less robotic.

Hunt Down AI Buzzwords: AI has its favorite crutches. Search and destroy robotic transition phrases like “Moreover,” “Furthermore,” “In conclusion,” and the dreaded “It is important to note that.” Replace them with more natural segues, or better yet, let your ideas flow so smoothly they don’t need a signpost.

The Read-Aloud Test: This is the final, non-negotiable step. Read your entire article out loud. If a sentence makes you stumble or sounds like something a robot would say on a sci-fi show, rewrite it. If it sounds unnatural to your ear, there’s a good chance it reads as machine-written to a detector too.

A quick note: simply swapping words for synonyms with a thesaurus won’t cut it. Detectors are smart enough to see past surface-level changes. True humanization comes from changing the rhythm, voice, and soul of the text.
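The buzzword hunt described above is the one step in this list that is easy to partly automate. Here is a minimal sketch that scans a draft for the stock phrases quoted earlier and reports where each one appears. The phrase list and the function name `find_stock_phrases` are illustrative assumptions, so extend the list with whatever crutches you notice in your own drafts.

```python
import re

# Phrases quoted in this article; the list is a starting point, not exhaustive.
STOCK_PHRASES = [
    "Moreover",
    "Furthermore",
    "In conclusion",
    "It is important to note that",
]


def find_stock_phrases(text, phrases=STOCK_PHRASES):
    """Return (phrase, character offset) pairs for each case-insensitive match."""
    hits = []
    for phrase in phrases:
        pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
        for match in pattern.finditer(text):
            hits.append((phrase, match.start()))
    # Sort by position so the report follows the order of the draft.
    return sorted(hits, key=lambda hit: hit[1])


draft = "Moreover, the results were clear. It is important to note that costs fell."
for phrase, offset in find_stock_phrases(draft):
    print(f"{offset:>4}  {phrase}")
```

Finding the phrases is the easy part. As the note above says, swapping each one for a synonym won’t help, so use the hits as a prompt to restructure the sentence.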

Method 2: The Best AI Humanizer Tools According to Reddit

When you’re producing content at scale, manual rewriting isn’t always feasible. This is where AI humanizer and paraphrasing tools come in, offering a faster alternative. But be warned: the market is a minefield. Some tools mangle your text into gibberish to fool detectors, while others produce clean copy that still gets flagged. Reddit communities, full of power users, are constantly testing these tools. Here’s what they’re saying:

- StealthGPT: A crowd favorite on Reddit, StealthGPT is often praised as a reliable all-rounder for bypassing most detectors, including the formidable Turnitin. Users love its different modes, though some mention it can occasionally over-simplify complex topics.

- Undetectable AI: Frequently discussed, but with a “your mileage may vary” warning. While it offers granular control, its performance against top-tier detectors like Originality.ai can be inconsistent. To achieve a “human” score, it sometimes sacrifices grammar, requiring a heavy manual clean-up.

- Hix Bypass: A popular choice for humanizing shorter content like emails or social media posts. Reddit users find its interface easy to use, but note that its effectiveness can dip with longer articles, and it sometimes adds fluffy, unnecessary sentences.

Beyond Articles: Humanizing Your Voice on Reddit Itself

The principles of sounding human aren’t just for blog posts. They’re even more critical on the ultimate social battleground: Reddit. In communities where a generic or robotic comment gets downvoted into oblivion, authenticity is everything. Whether you’re a non-native speaker, facing writer’s block, or just want to contribute high-value comments quickly, crafting the perfect response is a real challenge.

This is where an AI co-pilot designed *for* the platform makes all the difference. Pilot for Reddit is a browser extension that acts as your personal authenticity coach. Instead of just spinning words, its ‘Style Reply’ feature lets you generate comments with specific, genuine tones like ‘Humorous’ or ‘Deep Analysis,’ ensuring your voice fits the subreddit’s culture perfectly. With a ‘One-Click AI Reply,’ you can instantly overcome writer’s block with a well-structured, natural-sounding comment that’s ready for your final touch. It’s the perfect way to elevate your presence and earn more upvotes without losing your voice.

Ready to contribute comments that get noticed? Install the free Pilot for Reddit extension and transform your engagement today.

For Students: A Defensive Guide to Bypassing Turnitin

For students, an AI flag from Turnitin isn’t just an inconvenience; it can have serious academic consequences. The conversations on Reddit reveal a clear consensus: the best strategy isn’t about hiding, it’s about being able to prove your work. Think of this as building your alibi *before* you’re ever accused.

Proactive Defense Strategies

- Version History is Your Best Friend: This is the number one recommendation. Write your paper in Google Docs, or in Microsoft Word with the file saved to OneDrive so that version history is recorded. The detailed version history creates a timestamped audit trail of your writing process, which is very hard to reproduce with pasted-in AI output.

- Pre-Screen Your Own Work: Don’t wait for your professor to be the first to check. Run your final draft through a public AI detector to find and rewrite any sentences that might trigger a false positive.

- Use AI as an Assistant, Not an Author: The safest and most ethical workflow is to use AI for brainstorming, outlining, or summarizing research. Then, write the actual paper yourself. This process is completely defensible.

Reactive Defense Strategies

- Stay Calm and State Your Case: If you’re flagged, don’t panic. Calmly and firmly assert that the work is your own.

- Show, Don’t Just Tell: This is where your prep work pays off. Present your version history, early drafts, and research notes. This mountain of evidence demonstrates a clear process of human effort.

- Question the Accuser (Politely): Remind your instructor of the known inaccuracies and biases of AI detection tools, especially for technical writing or non-native English speakers. Offer to discuss the paper’s content in detail to prove your mastery of the subject.

Reddit’s Best Practices for Bypassing AI Detection

After sifting through countless threads and debates, a few golden rules emerge from the Reddit community as the most effective, long-term strategies for navigating the world of AI detection.

- The Hybrid Approach Always Wins: Relying on a single method is a rookie mistake. The most successful creators use a workflow: generate a first draft with AI, refine it with a humanizer tool, and then perform a final, thorough manual polish to inject personality and fix any lingering awkwardness.

- Treat Detectors as Flawed but Necessary Tools: While Reddit users love to point out the unreliability of detectors, they almost universally agree on one thing: you should still use them for a final check before publishing or submitting anything important. Think of it as a final spell-check for robotic language.

- The Human-in-the-Loop is Non-Negotiable: The most powerful recurring theme is that AI should be your co-pilot, not the pilot. Blindly publishing unedited AI content is not only risky but almost always results in mediocre, forgettable work. Your judgment is the final, most important ingredient.

The Future of AI Content and Authenticity

The arms race between AI generation and detection is just getting started. As AI models grow more sophisticated, their writing will likely become nearly indistinguishable from ours. In response, detection tools can be expected to evolve, looking beyond the text to analyze other signals of human effort.

In this coming landscape, flooded with machine-made content, the most valuable and truly “undetectable” quality won’t be a clever turn of phrase. It will be genuine authenticity. Your unique experiences, your distinct perspective, and your ability to create a real emotional connection are things that can’t be easily replicated. The ultimate goal, then, shouldn’t be just to *sound* human, but to use AI as a tool to amplify our most human qualities, creating content that is not only undetectable but unforgettable.
